GAMP Forum: SaaS, AI/ML and BlockChain in Regulated Environments

- Posted by Heather Longden

- On January 29, 2020

At the recent GAMP Forum sponsored by the ISPE Boston Area Chapter, multiple presenters from across the US, all active members of the ISPE GAMP Community of Practice (COP), met to discuss computer system validation (CSV) or Computer Software Assurance (CSA).

First, a bit of background: As the regulated medical device and drug industry struggles to adopt new technology and automation, the FDA, specifically the CDRH medical device arm of the FDA, put together a project team in 2016 to investigate the challenges that the industry was facing in this respect. It was no surprise that one of the root causes of the failure to adopt innovative new technology, or even to accept new versions of software already deployed and in use, was the perceived “validation burden.” In response, the CDRH assembled a team of industry experts, consultants and FDA members to draft a guidance on how to address this, leveraging the risk-based approach to validation which has been a part of GAMP 5 for over 10 years. While the draft guidance was not available at the time of the forum, it is expected to be released by the end of 2020.

The GAMP Forum plenary presentation was given by a panel of validation experts (Shana Dagel-Kinney, Ken Shitamoto and Khaled Mousally) who have been directly involved in the CDRH Case for Quality initiative, either as a member of the FDA team or by adopting the principles of CSA in anticipation of the release of the draft guidance.

The panelists were able to give insight into the expected content and emphasized that the effort of validating systems should be commensurate with the risk to patient safety that might result from failures of the system. Spending more time thinking about the criticality of the system for business and patient safety, the potential risks and how they might be controlled should focus any documented, scripted testing on high-risk systems only and, within those, on high-risk functionality.

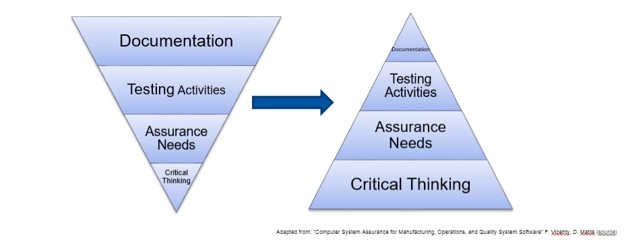

Using unscripted testing for user acceptance is also a key aspect along with leveraging the testing completed by the vendor of the application. This figure emphasized for me the paradigm shift that this initiative is proposing:

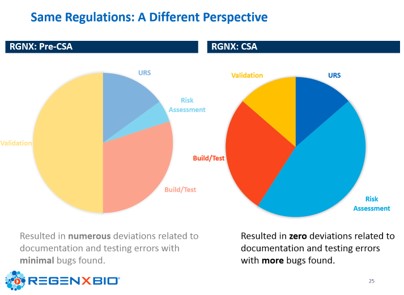

RegenXbio and Gilead have both put these concepts into practice: spending more time and effort thinking about the impact of the system, verifying the quality systems of their vendors and performing a proper risk assessment of functionality, not simply to tick a box but to shape their software validation scope. This assessment results in faster deployment of new or updated systems, minimizes documentation and provides significant business savings, while reducing errors and deviations and delivering more confidence in the use of computerized systems.

Following the plenary session, the program split into two tracks: one continuing the theme of risk assessment in system validation and the other exploring how new technologies might be used in regulated life science environments. Lorrie Vuolo-Schussler, chair of the GAMP Americas steering committee, began the CSV track by explaining why we validate software, who should be involved and how to approach the critical thinking part of validation through data and process mapping, and by describing an approach to performing risk assessments. As well as sharing how many regulatory guidances discuss risk assessment and how it is a key part of many ISPE GAMP guidances, Lorrie offered four keys to successful system validation and, yes, one of those was certainly risk assessment.

James Hughes then discussed how, at bluebird bio, on-premises regulated computerized systems are used today mostly for laboratory systems, with cloud-based Software as a Service (SaaS) multi-tenant applications accounting for over 95% of all other software applications. He explained how bluebird uses tools in the GAMP infrastructure guide to perform risk assessments of different deployment types and emphasized that proper verification of vendors is critical to understanding residual risks and any additional controls you might need to put in place.

This topic was followed by Steve Ferrell of Compliance Path, who was an author of the aforementioned GAMP infrastructure guide and interacts regularly with the FDA on the topic. Steve’s presentation brought the expectations of the cloud back to earth. While SaaS and other cloud models make great business sense, compliance and data integrity cannot be compromised and cloud deployments do little to benefit compliance. Risk assessments need a thorough understanding of the new risks to data introduced by cloud deployments, along with an imperative to verify and trust vendors to a new level. Auditing is key and while some ‘certificates’ can be useful indications that the vendor understands life science regulations, not all certifications are equal.

Steve then walked the audience through some of the 180 active controls of the FedRAMP guide, based on NIST SP 800-53, which can guide not only an audit for data integrity or compliance with predicate rules but also the service level agreement and validation approach.

Meanwhile, on the technology track, Eric Staib and James Canterbury shared their experiences using Artificial Intelligence (AI) and Machine Learning (ML) in regulated environments and the potential of BlockChain. To begin, Eric introduced the concepts of AI, ML and deep learning and emphasized the importance of clean and accurate data sets, not only to ensure “fairness” of algorithms during normal use but also, critically, for training the algorithms and performing algorithm validation.

He then discussed how the lifecycle of such a system, while different from that of a traditional application, should still follow similar expectations: requirements, specification, source data, validation, output accuracy and verification scripts are still needed to show that the application is working and can be trusted. (Anyone wanting to learn more should consider joining the GAMP Special Interest Group on Software Automation and Artificial Intelligence.)

James next tackled the topic of BlockChain and the principles of what the technology offers. As well as providing a practical demonstration of the concept of an independent ledger, and a live demo of the BlockChain in action on Raspberry Pi computers monitoring “non-fungible tokens,” he offered a really useful five-point test for viability to answer the question, “Do you need a blockchain for that?” One area where the answer is “yes” in the life science world is tracking inventory. This might be for supply chain monitoring, detection of counterfeit products and for recall purposes. One such current implementation is the MediLedger Network which will verify more than 90% of all drugs sold in the US and involves over 800 licensed manufacturers, over 100 wholesalers and more than 15 different solution providers to track serial numbers.
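To make the ledger idea a little more concrete, here is a minimal sketch of a hash-chained record of serial numbers. It is my own illustration, not James's demo and not how the MediLedger Network is implemented; the function names and the payload fields (`serial`, `event`) are hypothetical. It shows only the tamper-evidence property of a chained ledger and leaves out everything that makes a blockchain distributed (multiple nodes, consensus, signatures).

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, prev_hash: str, payload: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    body = json.dumps(
        {"index": index, "prev": prev_hash, "payload": payload},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain: list, payload: dict) -> None:
    """Append a block that commits to the hash of the block before it."""
    index = len(chain)
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({
        "index": index,
        "prev": prev_hash,
        "payload": payload,
        "hash": block_hash(index, prev_hash, payload),
    })


def is_valid(chain: list) -> bool:
    """Recompute every hash and link; any edit to an earlier block breaks the chain."""
    prev_hash = GENESIS_HASH
    for index, block in enumerate(chain):
        if block["index"] != index or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(index, prev_hash, block["payload"]):
            return False
        prev_hash = block["hash"]
    return True


if __name__ == "__main__":
    ledger = []
    append_block(ledger, {"serial": "SN-0001", "event": "manufactured"})
    append_block(ledger, {"serial": "SN-0001", "event": "shipped to wholesaler"})
    print(is_valid(ledger))  # chain as written

    ledger[0]["payload"]["event"] = "recalled"  # tamper with history
    print(is_valid(ledger))  # tampering is detected
```

Because each block's hash covers the previous block's hash, rewriting an early entry would require recomputing every later block, which is what makes an independently held copy of the ledger useful for supply chain and counterfeit checks.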
